4.0 Lesson 4: Perspective Projection

[TOC]

ViewPort

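Previously, to map vertex coordinates from [-1,1] to [0,width] × [0,height] (and z to [0,depth]), we used:

```cpp
Vec3i((v.x + 1.) / 2. * image.get_width(), (v.y + 1.) / 2. * image.get_height(), (v.z + 1.) * depth / 2.);
```

The same mapping can be expressed with a matrix: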

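```cpp
// Viewport matrix: maps [-1,1] onto [startx,endx] x [starty,endy], and z onto [0,depth].
Matrix viewPort(int startx, int starty, int endx, int endy, int depth) {
    Matrix matrix = Matrix::identity(4);
    int width = endx - startx;
    int height = endy - starty;
    // Map -1..1 onto startx..endx (taking x as the example): add 1, multiply by width,
    // divide by 2, then translate by startx.
    matrix[0][0] = (float)width / 2;
    matrix[0][3] = (float)(width + 2 * startx) / 2.f;
    matrix[1][1] = (float)height / 2;
    matrix[1][3] = (float)(height + 2 * starty) / 2.f;
    matrix[2][2] = (float)depth / 2;
    matrix[2][3] = (float)depth / 2;
    return matrix;
}

// Converts a 4x1 homogeneous matrix back to a Vec3f by dividing by w.
Vec3f m2v(Matrix matrix) {
    return Vec3f(matrix[0][0] / matrix[3][0],
                 matrix[1][0] / matrix[3][0],
                 matrix[2][0] / matrix[3][0]);  // z comes from row 2
}

// Usage: build the matrix once. This call maps x to [width/2, width] and y to
// [height/2, height]; viewPort(0, 0, width, height, depth) reproduces the formula above.
Matrix viewportMatrix = viewPort(width / 2, height / 2, width, height, depth);

// Then, for each vertex j of a face:
Vec3f v = model.vert(face, j);
uv[j] = model.uv(face, j);
Vec3f tmp = m2v(viewportMatrix * v);
screenCordinates[j] = Vec3i(tmp[0], tmp[1], tmp[2]);
```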

If you are interested, try deriving this matrix yourself; the reasoning is the same as for the formula above.
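As a quick check of that derivation, here is a minimal standalone sketch that builds the same entries as `viewPort()` and compares the matrix result against the per-component formula. The `Vec4`/`Mat4` aliases and the functions `viewportSketch`, `mulVec` and `formulaMapping` are hypothetical names for this sketch, not the tutorial's `Matrix` class.

```cpp
#include <array>
#include <cassert>

// Hypothetical minimal types for this sketch (not the tutorial's Matrix/Vec3f).
using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;

// Same entries as viewPort(): x' = (x + 1) * w / 2 + startx, likewise for y;
// z' = (z + 1) * depth / 2.
Mat4 viewportSketch(int startx, int starty, int endx, int endy, int depth) {
    assert(endx > startx && endy > starty);
    const float w = static_cast<float>(endx - startx);
    const float h = static_cast<float>(endy - starty);
    Mat4 m{};  // value-initialized: all zeros
    m[0][0] = w / 2.f;      m[0][3] = w / 2.f + startx;
    m[1][1] = h / 2.f;      m[1][3] = h / 2.f + starty;
    m[2][2] = depth / 2.f;  m[2][3] = depth / 2.f;
    m[3][3] = 1.f;
    return m;
}

// Matrix times homogeneous column vector.
Vec4 mulVec(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        for (int k = 0; k < 4; ++k)
            r[i] += m[i][k] * v[k];
    return r;
}

// The original per-component formula, mapping onto [0,width] x [0,height] x [0,depth].
Vec4 formulaMapping(const Vec4& v, int width, int height, int depth) {
    return {(v[0] + 1.f) / 2.f * width, (v[1] + 1.f) / 2.f * height,
            (v[2] + 1.f) * depth / 2.f, 1.f};
}
```

With `startx = starty = 0` the two agree exactly; a nonzero start only adds the translation column.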

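Projection

Orthographic projection

My understanding: the viewport transform above is essentially an orthographic projection.

Orthographic projection is a fairly simple kind of projection in which all projection lines are perpendicular to the final drawing surface. It maps coordinates straight onto the 2D screen plane, but such a direct projection matrix produces unrealistic results, because an object's distance has no effect on its projected size.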

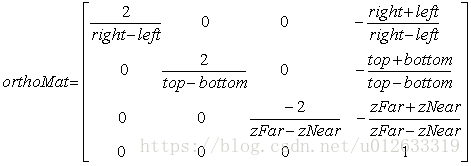

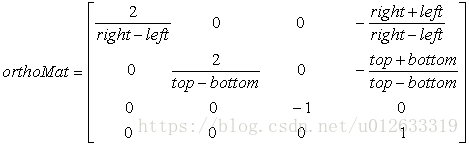

Orthographic projection matrix (3D and 2D cases):

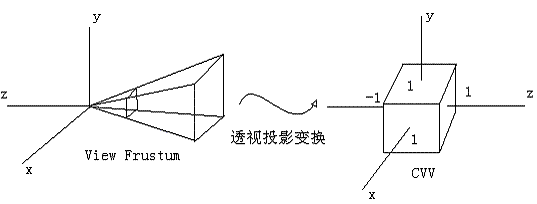
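For reference, a common form of the orthographic matrix maps an axis-aligned box [l,r]×[b,t]×[n,f] linearly onto the cube [-1,1]³. The sketch below assumes the same z convention as the perspective matrix later in this lesson (z = n maps to -1, z = f to +1); `orthoSketch`, `mulVec` and the `Vec4`/`Mat4` aliases are hypothetical names. It also shows the limitation described above: two points that differ only in depth land on the same x and y.

```cpp
#include <array>
#include <cassert>

// Hypothetical minimal types for this sketch.
using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;

// Linearly maps the box [l,r] x [b,t] x [n,f] onto [-1,1]^3 (n -> -1, f -> +1).
Mat4 orthoSketch(float l, float r, float b, float t, float n, float f) {
    assert(r != l && t != b && f != n);
    Mat4 m{};
    m[0][0] = 2.f / (r - l);  m[0][3] = -(r + l) / (r - l);
    m[1][1] = 2.f / (t - b);  m[1][3] = -(t + b) / (t - b);
    m[2][2] = 2.f / (f - n);  m[2][3] = -(f + n) / (f - n);
    m[3][3] = 1.f;  // w stays 1, so no perspective division is needed
    return m;
}

// Matrix times homogeneous column vector.
Vec4 mulVec(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        for (int k = 0; k < 4; ++k)
            r[i] += m[i][k] * v[k];
    return r;
}
```

Since the x and y rows have zeros in the z column, depth never influences the projected position.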

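Perspective projection

Orthographic projection cannot show how far away objects are, because all projection lines are parallel (perpendicular to the screen). Perspective projection adds the effect of distant objects appearing smaller, which looks more realistic.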

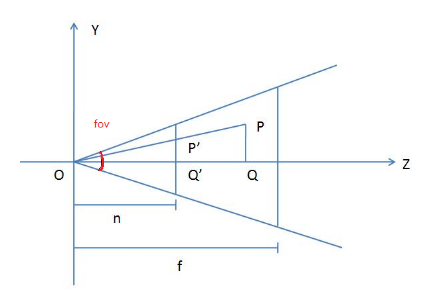

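A frustum can be defined by a near plane, a far plane, the aspect ratio (width/height) of the clipping plane, and the fov (vertical field of view). Objects inside the frustum are projected onto the near plane. I came across a sentence in an article that explains this well: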

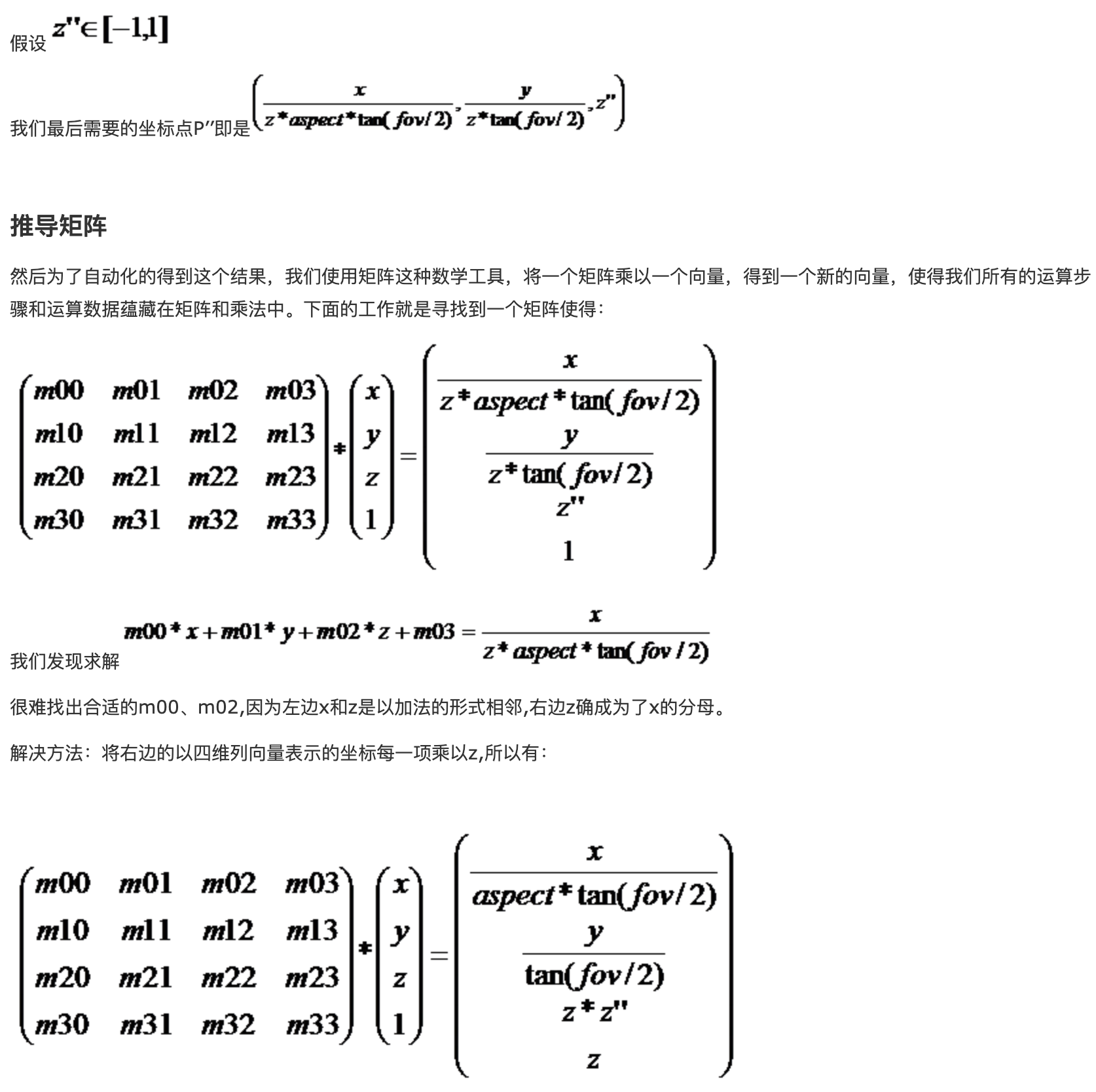

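The job of the perspective projection matrix is to map the X, Y, Z coordinates of vertices inside the view frustum into the range [-1,1]. This amounts to warping the frustum, a truncated pyramid, into a cube.

This makes it easy to see why objects near the near plane look larger: the frustum is narrowest there, so the same object covers a larger fraction of the cross-section and is stretched more when the frustum is squeezed into the cube.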
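A tiny numeric illustration, assuming a camera at the origin looking down +z (the convention `initPersProjMatrix` below uses, since it sets w = z) and the similar-triangles rule that a point at depth z lands at n·x/z on the near plane. The function names are hypothetical.

```cpp
#include <cassert>

// Projects one coordinate of a point at depth z onto the near plane z = n
// (camera at the origin looking down +z; similar triangles give n * coord / z).
float projectOntoNear(float coord, float z, float n) {
    assert(z > 0.f && n > 0.f);
    return n * coord / z;
}

// Projected length of a segment [lo, hi] whose endpoints share the same depth z.
float projectedSize(float lo, float hi, float z, float n) {
    return projectOntoNear(hi, z, n) - projectOntoNear(lo, z, n);
}
```

With n = 1, a segment of length 2 projects to length 1 at z = 2 but only 0.5 at z = 4: doubling the distance halves the on-screen size.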

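Derivation

Take a point P inside the view frustum with coordinates (x, y, z). We map its x, y coordinates and its z coordinate to [-1,1] separately:

- Let the near clipping plane have width W and height H. The x coordinate of P′ (P projected onto the near plane) lies in [-W/2, W/2] and its y coordinate in [-H/2, H/2]; map each linearly into [-1,1].

- z ranges from N to F and must be mapped to [-1,1].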
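The linear remap in the first bullet can be written as a small helper (the name `toNdc` is hypothetical). For a symmetric range [-W/2, W/2] it reduces to x′/(W/2).

```cpp
#include <cassert>

// Linearly maps v from [lo, hi] onto [-1, 1]: lo -> -1, hi -> +1.
float toNdc(float v, float lo, float hi) {
    assert(hi != lo);
    return 2.f * (v - lo) / (hi - lo) - 1.f;
}
```

Note that in the final matrix z is not remapped linearly: because the z row is also divided by w = z, the result has the form A + B/z, which is linear in 1/z rather than in z.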

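Parameters:

- FOV: vertical field-of-view angle

- aspect: aspect ratio (width/height) of the clipping plane

- zNear: distance from the camera to the near clipping plane (n in the figure)

- zFar: distance from the camera to the far clipping plane (f in the figure)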
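These parameters fix the size of the near clipping plane: its half-height is n·tan(fov/2), and its width is aspect times its height. A sketch, assuming FOV is given in degrees as in `initPersProjMatrix` (the struct `NearPlaneSize` and function `nearPlaneSize` are hypothetical):

```cpp
#include <cassert>
#include <cmath>

// Hypothetical result type: full width and height of the near clipping plane.
struct NearPlaneSize {
    float width;
    float height;
};

// fovDeg: vertical field of view in degrees; aspect = width / height; zNear > 0.
NearPlaneSize nearPlaneSize(float fovDeg, float aspect, float zNear) {
    assert(fovDeg > 0.f && fovDeg < 180.f && zNear > 0.f);
    const float kPi = 3.14159265358979f;
    const float halfFovRad = fovDeg * kPi / 360.f;            // (fov / 2) in radians
    const float height = 2.f * zNear * std::tan(halfFovRad);  // tan(fov/2) = (H/2) / n
    return {aspect * height, height};
}
```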

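First, find the coordinates of P′, the projection of point P onto the near clipping plane.

Because corresponding sides of similar triangles have the same ratio, the figure gives: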

Note: in the figure above, the numerator of entry [2,2] is written the wrong way round.
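The figure itself is not reproduced here. Assuming the camera sits at the origin looking down +z (consistent with `matrix[3][2] = 1`, i.e. w = z, in `initPersProjMatrix`), the similar-triangles step and the subsequent remap of x′ and y′ read:

$$\frac{x'}{x} = \frac{n}{z} \;\Rightarrow\; x' = \frac{n\,x}{z}, \qquad y' = \frac{n\,y}{z}$$

$$x_{ndc} = \frac{x'}{W/2} = \frac{2n}{W}\cdot\frac{x}{z}, \qquad y_{ndc} = \frac{y'}{H/2} = \frac{2n}{H}\cdot\frac{y}{z}$$

For z, requiring z = n to map to -1 and z = f to +1 after the division by z gives

$$z_{ndc} = \frac{f+n}{f-n} - \frac{2fn}{(f-n)\,z}$$

The matrix supplies the numerators, and its last row (0, 0, 1, 0) sets w = z so that the perspective division provides the common factor 1/z.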

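Let t be the y value of the top edge of the near plane, and let w and h be the near plane's width and height (W and H above), so t = h/2. The matrix above can then be rewritten using

$$\text{aspect} = \frac{w}{h}, \qquad \tan\frac{fov}{2} = \frac{t}{n}$$

Entry [0][0], which is $\frac{2n}{w}$, becomes $\frac{h\,n}{w\,t}$ because $h/t = 2$, that is $\frac{1}{\text{aspect}\cdot\tan(fov/2)}$. Likewise [1][1] $= \frac{2n}{h} = \frac{n}{t} = \frac{1}{\tan(fov/2)}$.

So: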

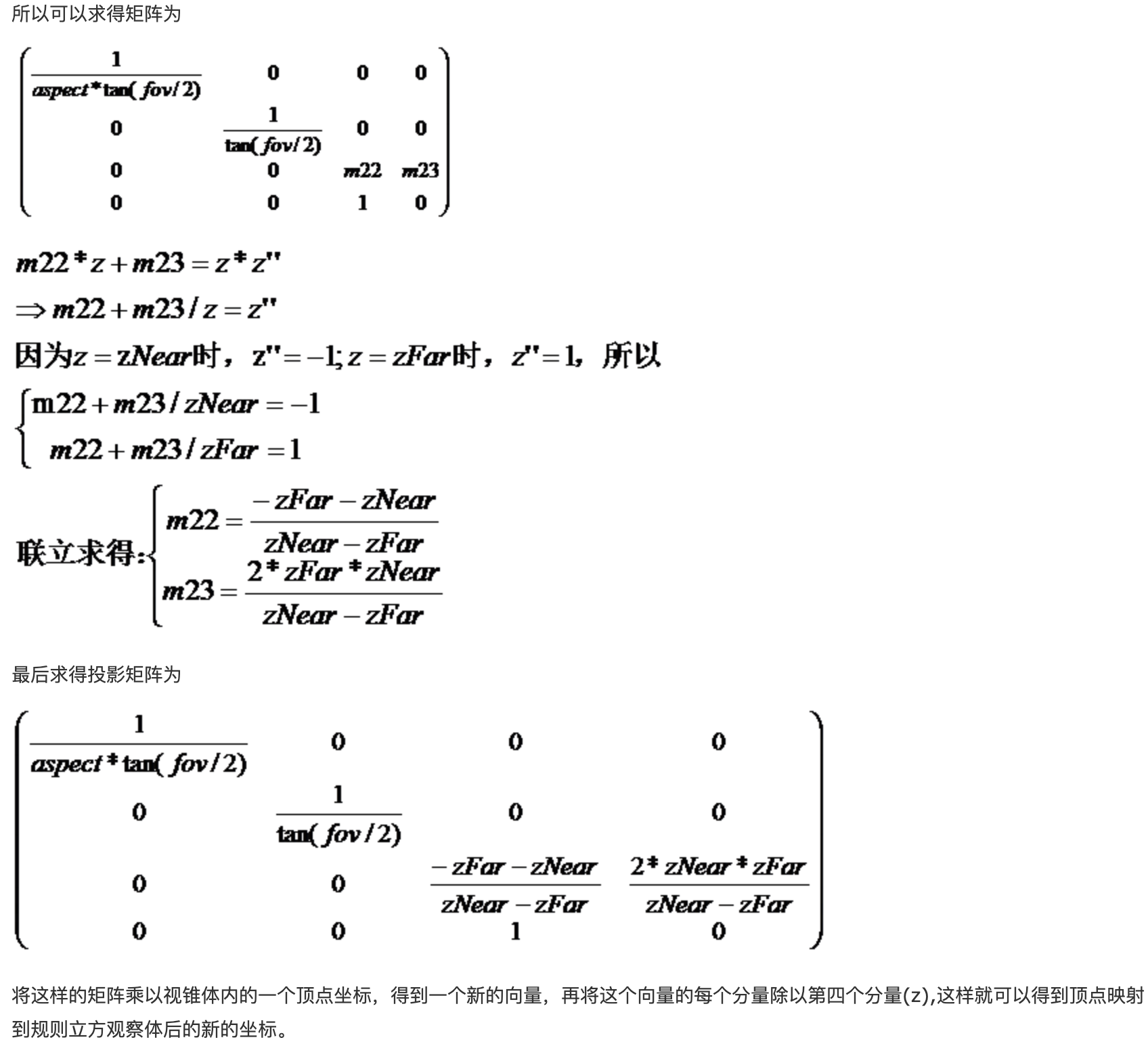

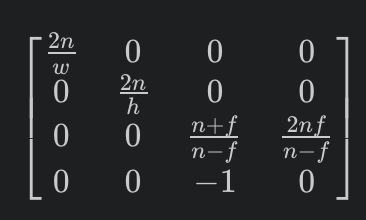

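Perspective projection matrix

```cpp
#include <cmath>

// Builds the perspective projection matrix. FOV is the vertical field of view in degrees,
// aspect = width / height, zNear / zFar are the distances to the clipping planes.
Matrix Matrix::initPersProjMatrix(float FOV, const float aspect, float zNear, float zFar) {
    Matrix matrix(4, 4);  // relies on Matrix(4, 4) starting as all zeros
    const float zRange = zNear - zFar;
    const float tanHalfFOV = std::tan(toRadian(FOV / 2.0f));
    matrix[0][0] = 1.0f / (tanHalfFOV * aspect);  // 2n / w
    matrix[1][1] = 1.0f / tanHalfFOV;             // 2n / h
    matrix[2][2] = (-zNear - zFar) / zRange;      // (n + f) / (f - n)
    matrix[2][3] = 2.0f * zFar * zNear / zRange;  // -2fn / (f - n)
    matrix[3][2] = 1.f;                           // w = z, consumed by the perspective division
    return matrix;
}
```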
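To check the entries numerically, here is a standalone sketch with the same formulas on plain `std::array` types (`perspectiveSketch`, `projectToNdc` and the aliases are hypothetical names; it assumes 0 < zNear < zFar, as the derivation does). It verifies that the near plane maps to z = -1, the far plane to z = +1, and the near-plane corner to x = y = 1.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical minimal types for this sketch.
using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;

// Same entries as initPersProjMatrix(); fovDeg in degrees, camera looks down +z (w = z).
Mat4 perspectiveSketch(float fovDeg, float aspect, float zNear, float zFar) {
    assert(zNear > 0.f && zFar > zNear);
    const float kPi = 3.14159265358979f;
    const float tanHalfFov = std::tan(fovDeg * kPi / 360.f);
    const float zRange = zNear - zFar;
    Mat4 m{};
    m[0][0] = 1.f / (tanHalfFov * aspect);
    m[1][1] = 1.f / tanHalfFov;
    m[2][2] = (-zNear - zFar) / zRange;
    m[2][3] = 2.f * zFar * zNear / zRange;
    m[3][2] = 1.f;
    return m;
}

// Multiplies, then divides every component by w to get normalized device coordinates.
Vec4 projectToNdc(const Mat4& m, const Vec4& p) {
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        for (int k = 0; k < 4; ++k)
            r[i] += m[i][k] * p[k];
    const float w = r[3];
    return {r[0] / w, r[1] / w, r[2] / w, 1.f};
}
```

With n = 1 and f = 10, a point at z = 2 gets z_ndc = 1/9 ≈ 0.11 rather than the -7/9 ≈ -0.78 a linear remap would give, so most of the depth range is spent close to the near plane.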

Perspective division

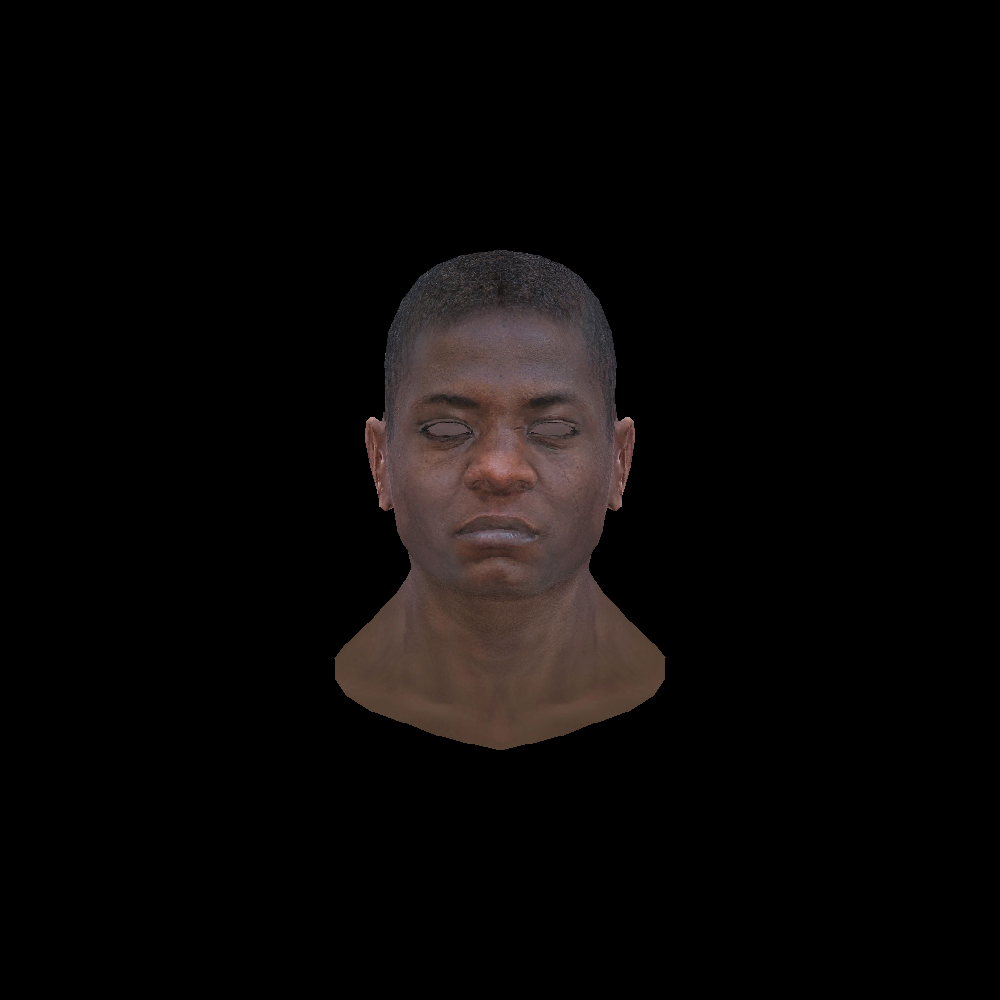
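Perspective division turns the homogeneous result (x, y, z, w) back into ordinary coordinates by dividing every component by w, which leaves w = 1. A minimal sketch with hypothetical names: if only x, y, z are divided and w keeps its old value, any later matrix with a translation column (such as the viewport matrix) scales its offset by that stale w, which `affineX` makes visible.

```cpp
#include <array>
#include <cassert>

// Hypothetical minimal type for this sketch.
using Vec4 = std::array<float, 4>;

// Perspective division: divide all four components by w, so the result has w == 1.
Vec4 perspectiveDivide(const Vec4& clip) {
    const float w = clip[3];
    assert(w != 0.f);
    return {clip[0] / w, clip[1] / w, clip[2] / w, 1.f};
}

// Row 0 of a viewport-style matrix applied to v: x' = s * x + t * w.
// The translation t is multiplied by w, so w must be 1 for t to act as a plain offset.
float affineX(const Vec4& v, float s, float t) {
    return s * v[0] + t * v[3];
}
```

For clip coordinates (2, 4, 6, 2), the full division gives (1, 2, 3, 1) and x' = 100·1 + 50 = 150; leaving w at 2 would give 200 instead.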

Example

```cpp
int width = 1000;
int height = 1000;
int depth = 255;
TGAImage image(width, height, TGAImage::RGB);
Model model("../obj/african_head.obj");
Vec3f lightDir(0, 0, -1);

Matrix viewportMatrix = viewPort(width / 4, height / 4, width * 3.f / 4, height * 3.f / 4, depth);
Matrix projectMatrix = Matrix::initPersProjMatrix(30, 1, 3, -3);

int* zbuffer = new int[width * height];
for (int i = 0; i < width * height; i++) {
    zbuffer[i] = std::numeric_limits<int>::min();
}

for (int face = 0; face < model.nfaces(); face++) {
    Vec3i screenCordinates[3];
    Vec3f worldCordinates[3];
    Vec2i uv[3];
    for (int j = 0; j < 3; j++) {
        worldCordinates[j] = model.vert(face, j);
    }
    // Flat shading: skip faces that receive no light
    Vec3f norm = (worldCordinates[2] - worldCordinates[0]) ^ (worldCordinates[1] - worldCordinates[0]);
    norm.normalize();
    float intensity = norm.dotProduct(lightDir);
    if (intensity <= 0) {
        continue;
    }
    for (int j = 0; j < 3; j++) {
        Vec3f v = model.vert(face, j);
        uv[j] = model.uv(face, j);
        Matrix tmp = projectMatrix * v;
        // Perspective division: divide x, y, z by w, then set w to 1 so that the
        // viewport's translation column and m2v() are not scaled by w a second time
        tmp[0][0] /= tmp[3][0];
        tmp[1][0] /= tmp[3][0];
        tmp[2][0] /= tmp[3][0];
        tmp[3][0] = 1.f;
        Vec3f tmp2 = m2v(viewportMatrix * tmp);
        screenCordinates[j] = Vec3i(tmp2[0], tmp2[1], tmp2[2]);
    }
    triangle_line_sweeping_texture_3d(screenCordinates[0], screenCordinates[1], screenCordinates[2],
                                      uv[0], uv[1], uv[2], &model, intensity, image, zbuffer);
}

image.flip_vertically();
image.write_tga_file("l4_5_viewport_perspective_project.tga");
delete[] zbuffer;
```

Translation

```cpp
int width = 1000;
int height = 1000;
int depth = 255;
TGAImage image(width, height, TGAImage::RGB);
Model model("../obj/african_head.obj");
Vec3f lightDir(0, 0, -1);
Vec3f camera(0, 0, 3);

// Maps [-1,1] onto [width/2, width] x [height/2, height]
Matrix viewportMatrix = viewPort(width / 2, height / 2, width, height, depth);
Matrix projectMatrix = Matrix::initPersProjMatrix(90, 1, 100, 1000);

// Note the translation matrix: a shift of (-width/4, -height/4) pixels moves the
// picture to [width/4, 3*width/4] x [height/4, 3*height/4]
Matrix tansMatrix = Matrix::identity(4);
tansMatrix[0][3] = -width / 4;
tansMatrix[1][3] = -height / 4;

int* zbuffer = new int[width * height];
for (int i = 0; i < width * height; i++) {
    zbuffer[i] = std::numeric_limits<int>::min();
}

for (int face = 0; face < model.nfaces(); face++) {
    Vec3i screenCordinates[3];
    Vec3f worldCordinates[3];
    Vec2i uv[3];
    for (int j = 0; j < 3; j++) {
        Vec3f v = model.vert(face, j);
        uv[j] = model.uv(face, j);
        // Note the order: the rightmost matrix acts first, so the viewport transform
        // runs before the translation, and the translation is measured in pixels
        Vec3f tmp = m2v(tansMatrix * viewportMatrix * v);
        screenCordinates[j] = Vec3i(tmp[0], tmp[1], tmp[2]);
        worldCordinates[j] = v;
    }
    Vec3f norm = (worldCordinates[2] - worldCordinates[0]) ^ (worldCordinates[1] - worldCordinates[0]);
    norm.normalize();
    float intensity = norm.dotProduct(lightDir);
    if (intensity > 0) {
        triangle_line_sweeping_texture_3d(screenCordinates[0], screenCordinates[1], screenCordinates[2],
                                          uv[0], uv[1], uv[2], &model, intensity, image, zbuffer);
    }
}

image.flip_vertically();
image.write_tga_file("l4_5_viewport_translate.tga");
delete[] zbuffer;
```
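Because matrices act on column vectors from right to left, `tansMatrix * viewportMatrix * v` applies the viewport first and then shifts by -width/4 in pixels. A standalone sketch of the x/y part (hypothetical helpers `identity4`, `translation`, `viewportXY`, `mulMat`, `mulVec`; width = height = 1000 as above) compares both orders for the NDC center.

```cpp
#include <array>
#include <cassert>

// Hypothetical minimal types for this sketch.
using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;

Mat4 identity4() {
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.f;
    return m;
}

// Translation by (tx, ty) in homogeneous coordinates.
Mat4 translation(float tx, float ty) {
    Mat4 m = identity4();
    m[0][3] = tx;
    m[1][3] = ty;
    return m;
}

// x/y part of viewPort(startx, starty, endx, endy, ...): x' = (x + 1) * w / 2 + startx.
Mat4 viewportXY(float startx, float starty, float endx, float endy) {
    assert(endx > startx && endy > starty);
    Mat4 m = identity4();
    m[0][0] = (endx - startx) / 2.f;  m[0][3] = (endx - startx) / 2.f + startx;
    m[1][1] = (endy - starty) / 2.f;  m[1][3] = (endy - starty) / 2.f + starty;
    return m;
}

// Matrix product a * b.
Mat4 mulMat(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

// Matrix times homogeneous column vector.
Vec4 mulVec(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        for (int k = 0; k < 4; ++k)
            r[i] += m[i][k] * v[k];
    return r;
}
```

In the order used above (translation times viewport), the NDC center lands at pixel (500, 500), the middle of a 1000×1000 image. Reversing the order applies the -250 offset in NDC units, where the viewport then scales it by 250, placing the point at x = -61750, far off-screen.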